As a virtualisation professional, you face an almost limitless choice of third-party software to bolt into your environment. While VMware covers many of the bases with its own product lines in capacity planning, lifecycle management and reporting, some of them are missing a feature or two, or are just too complex for your environment. Many vendors seek to address this problem with a multi-product offering, but so far I’ve only come across a single vendor who aims to address issues like these with a single product.

I spoke with Jason Cowie & Colin Jack from Embotics a few months ago, but was only able to secure a product demo last week. In some ways I wish I’d waited until the next release, as it sounds like it’s going to be packed with some interesting features. I don’t really like blogging about what is “coming up in the next version”, so I will be concentrating on what you can get today (or, in a couple of cases, in the minor release due any time). This isn’t something specifically levelled at the Embotics guys, who are most likely so immersed in the “vNext” code that to them it is the current product. As an architect, I’m just as guilty of evangelising about features of a product that is several months away from deployment. Many vendors do the same to whip up interest around a product (Hyper-V R2 is a great example of this), but comparing a roadmap item with an item that’s on the shelves today isn’t a level playing field. When the 4.0 version of V-Commander is released, I look forward to seeing all of the mentioned features for myself!

So what is it?

The website really does define the V-Commander product as being all things to all men, that is to say, if those men are into virtualisation management! It shows how the product can be used to help with Capacity Management, Change Management, Chargeback and IT Costing, Configuration Management, Lifecycle Management, Performance Management and Self Service.

That’s a lot of strings to its bow, and certainly enough to make you wonder if it’s a jack of all trades, master of none type of product. After a good look at the offering, I can safely say that’s not the case, but it’s definitely stronger in some of those fields than others.

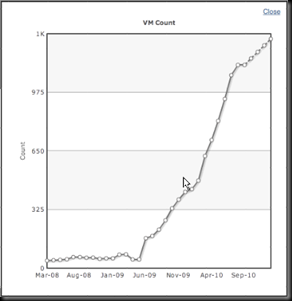

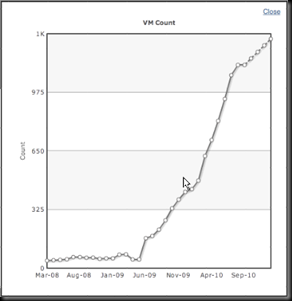

The “Secret Sauce” of the V-Commander product is its policy engine. Policies drive almost every facet of the product, and they are what allow it to be as flexible as it is. Once connected to one or more vCenters, it will start gathering information right away. This is what they refer to as “0-Day Analysis”. For a large environment, the information gathering cycle for some capacity management products can take quite some time (I’ve seen up to 36 hours) as the appliance tries to pull some pretty granular information from vCenter. I wasn’t able to run the Embotics product against a large environment to see if this is the case. However, the Embotics guys gave me an example: pulling the information for 30 months of operation for a vCenter with 1200 machines took a couple of hours, which to me is more than acceptable. The headline report that Embotics shows off as being a fast one to generate is one showing the number of deployed VMs over time, which is a handy way of illustrating potential sprawl.
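To make the “deployed VMs over time” idea concrete, here is a minimal sketch of how such a trend could be computed from a list of deploy and decommission events. It is not how V-Commander builds its report; the function name `cumulative_vm_count`, the event format and the sample data are all assumptions made for illustration.

```python
from collections import Counter
from datetime import date
from itertools import accumulate


def cumulative_vm_count(events):
    """Return [(month, running_total)] from (date, delta) events.

    delta is +1 for a deployment and -1 for a decommission.
    The event format is hypothetical, not a V-Commander export.
    """
    per_month = Counter()
    for day, delta in events:
        per_month[day.strftime("%Y-%m")] += delta
    months = sorted(per_month)
    # Running total of the net change per month.
    totals = accumulate(per_month[m] for m in months)
    return list(zip(months, totals))


# Made-up sample data: two deployments in January, one deployment and
# one decommission in February, two deployments in March.
sample_events = [
    (date(2010, 1, 5), +1),
    (date(2010, 1, 20), +1),
    (date(2010, 2, 3), +1),
    (date(2010, 2, 17), -1),
    (date(2010, 3, 9), +1),
    (date(2010, 3, 9), +1),
]
trend = cumulative_vm_count(sample_events)
```

A running total that only ever climbs, with few or no decommissions, is the kind of pattern that would suggest sprawl.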

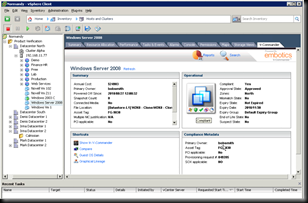

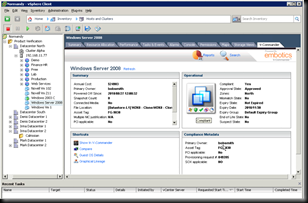

The next key thing that V-Commander does is provide some more flexible metadata about a virtual machine. Entry of this data can be enforced by policy; for example, you might want to say that all machines must have an end-of-use or review date set before they can be deployed. This really enforces the mantra of a cradle-to-grave lifecycle management application. The VM is tracked from its provisioning, through its working life and finally during the decommission phase. Virtual machine templates can be tracked in the same way as the machines themselves, which sounds like an appealing way of ensuring you are not trying to deploy a machine from an old template. What is interesting is that the metadata for an object can come in from other third parties, so there is potential to track patching or antivirus status, should the appropriate integration be available.
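As a rough illustration of the “metadata enforced by policy” idea, the sketch below checks that a VM request carries an end-of-use or review date before it is allowed through. The `VmRequest` class, its field names and the `deployment_violations` function are hypothetical and are not taken from the V-Commander product.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class VmRequest:
    # Hypothetical metadata fields, chosen for this example only.
    name: str
    end_of_use: Optional[date] = None
    review_date: Optional[date] = None


def deployment_violations(vm: VmRequest, today: date) -> List[str]:
    """Return the reasons a request fails the policy (empty list = OK)."""
    problems = []
    if vm.end_of_use is None and vm.review_date is None:
        problems.append("no end-of-use or review date set")
    for label, value in (("end-of-use", vm.end_of_use),
                         ("review", vm.review_date)):
        # A date that has already passed is treated as invalid.
        if value is not None and value <= today:
            problems.append(f"{label} date is not in the future")
    return problems


today = date(2010, 11, 1)
ok = deployment_violations(
    VmRequest("web01", end_of_use=date(2011, 6, 30)), today)
missing = deployment_violations(VmRequest("test02"), today)
stale = deployment_violations(
    VmRequest("old03", review_date=date(2010, 1, 1)), today)
```

Returning a list of reasons rather than a simple yes/no makes it easy to show the requester exactly which metadata is still missing.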

![f09cb019-7f5b-4171-ae39-588eaadc1429[6] f09cb019-7f5b-4171-ae39-588eaadc1429[6]](http://jfvi.co.uk/wp-content/uploads/2010/11/f09cb0197f5b4171ae39588eaadc14296_thumb.png)

Policy enforcement is real time. For example, if I attempted to use an rCLI command to power on a VM that V-Commander policies do not allow to be powered on, the product is fast enough to power it back off again before it has left the BIOS. In addition, an alert would be generated about the rogue activity.
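The behaviour described above boils down to “react to a power-on event, check policy, correct and alert”. The following is a minimal sketch of that decision logic only; the function name, the action tuples and the notion of an approved set are assumptions for illustration, and no real vCenter or V-Commander API is being called.

```python
def enforce_power_on(vm_name, approved_vms):
    """Decide what to do when a power-on event arrives for vm_name.

    Returns a list of (action, detail) tuples. An empty list means the
    power-on is allowed. All names here are hypothetical.
    """
    if vm_name in approved_vms:
        return []
    return [
        ("power_off", vm_name),
        ("alert", f"unauthorised power-on of {vm_name}"),
    ]


approved = {"web01", "db01"}
allowed_actions = enforce_power_on("web01", approved)
rogue_actions = enforce_power_on("expired-vm", approved)
```

In a real deployment the event would come from the hypervisor management layer and the actions would be carried out against it; here they are simply returned so the logic can be read in isolation.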

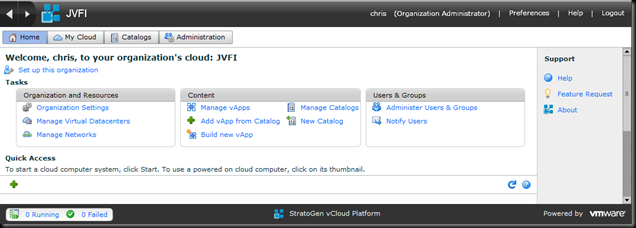

The web GUI of the product splits into two main views: in addition to the administrator’s view there is also a “self service portal”. I put this in quotes for the very good reason that other self service portals have recently hit the market which are more about self provisioning. At this point in time the product does not provide self provisioning, but it is thought to be a high priority for the 4.0 release. What the portal does allow is very fine-grained control that can be passed directly to VM owners without requiring any underlying access to vCenter, which is a feature that has some legs. They can currently request a machine, complete metadata and manage their specific group of machines within an easy-to-use interface.

It is also possible to pull the data from V-Commander into the VI Client via a plugin; this is definitely aimed at the administrator rather than the VM owner.

Automation is the key here, and there are many areas where the product highlights that very well. While there is a degree of automation currently within the product, I think the next version will sink or swim on how well that ability is delivered. For example, when it comes to rightsizing a virtual machine, identifying those machines that may need a CPU adding or removing is great, but being able to update the hardware on those machines automatically is what would actually get used, particularly in a large environment. Smaller shops may have a better “gut feeling” for their VMs, and hence will quite possibly tune the workloads manually more often. The product doesn’t have a whole lot in terms of analytics of virtual machine performance. The capacity management policies are pretty simple metrics at the moment, so it’s certainly another area for potential growth to put that policy-based automation engine to use.
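To show what a “pretty simple metric” for rightsizing might look like, here is a minimal sketch that recommends adding or removing a vCPU based on average CPU utilisation. The 20% and 80% thresholds, the `VmUsage` class and the `recommended_vcpus` function are assumptions of mine, not the rules V-Commander applies.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class VmUsage:
    # Hypothetical record of a VM and its sampled CPU utilisation (%).
    name: str
    vcpus: int
    cpu_percent_samples: List[float]


def recommended_vcpus(vm: VmUsage, low: float = 20.0,
                      high: float = 80.0) -> int:
    """Suggest a vCPU count from average utilisation.

    Above `high` add one vCPU; below `low` remove one (never going
    below a single vCPU); otherwise leave the VM alone.
    """
    average = mean(vm.cpu_percent_samples)
    if average > high:
        return vm.vcpus + 1
    if average < low and vm.vcpus > 1:
        return vm.vcpus - 1
    return vm.vcpus


busy = recommended_vcpus(VmUsage("app01", 2, [90.0, 95.0, 85.0]))
idle = recommended_vcpus(VmUsage("file01", 4, [5.0, 10.0, 8.0]))
steady = recommended_vcpus(VmUsage("web01", 2, [50.0, 55.0]))
```

The recommendation is the easy part; the automation discussed above is the step of actually applying the new vCPU count to the VM without an administrator doing it by hand.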

V-Commander is slated to support Hyper-V in the 3.7 release, which is out any time now. I shall be interested to see how it will interact with the Self Service Portal in the upcoming versions of SC:VMM. From what I’ve seen of the product, it could sit quite neatly behind the scenes of your <insert self service portal product here> and provide some of the policy-based lifecycle management. All it would need is a hook in from that front end so that those policies can be selected accordingly.

You get a lot of product for your money which, depending on how you want to spend it, could cost you a fixed fee plus maintenance, or an annual “rental” fee. I’ve been weighing up the pros and cons of each licensing model, and it looks like the subscription-based model is the easier one to justify. It also means that should there be a significant change in the way you run your infrastructure, you won’t be left holding licences that you’ve paid for but can’t really use.

So is this the only management software you’ll ever need? At the moment, no it isn’t. That said, it’s got some really strong features which, combined with a good service management strategy, could help align your virtual infrastructure with the rest of your business.

NB: I’ve just had some clarification on the release schedule for Hyper-V support.

“Given priorities and customer feedback (lower than expected adoption rates of Hyper-V), we decided to do only an internal release of Hyper-V (Alpha) with 3.7 (basic plumbing), with a GA version of Hyper-V coming in the first half of 2011. At the beginning of 2011 we will begin working with early adopters on beta testing.”

If you have a Hyper-V environment and would like to take advantage of the Embotics product, I’m sure they would be keen to hear from you.

Note that the Management network (as I’ve called it) is a vApp-specific network rather than an organisation-wide one, which is why I still have an internal network connection to the VM so that it can talk to other VMs within the VDC. The firewall VM I configured earlier is organisation wide, so any machine in the VDC could be published via it. For larger deployments I wonder if it would make sense (although it’s not really within the spirit of “the cloud”) to use hardware devices for edge networking, for example an F5 load balancer. While they do have a VM available which would offer a per-vApp LTM instance, some shops may want the functionality of the physical hardware (for example SSL offload). There may also be licence considerations when it comes to deploying the edge layer as multiple virtual instances.

