This post is a shameless bit of promotion for three parties:

Me: I’m enjoying blogging and get a little warm fuzzy feeling every time I open up Google Analytics and see the graph going up 🙂

Veeam: Veeam have launched a free version of one of their leading product lines, and they are keen for people to know about it, try it and possibly one day upgrade to the Enterprise version.

The International Federation of Red Cross and Red Crescent Societies: Veeam have launched Reporter Free Edition as part of their “Totally Transparent Blogging competition”. The prize is not something shiny from Apple, EMC or Veeam themselves, but a $1000 charity gift card. As one of the first 15 entrants I’ve already “won” $25 for the IFRC, but if this post (and the endless nagging via Twitter, MSN, forums and email) persuades enough people to download the free product then I win, by which I mean the IFRC win!

Right, shameless plugs over and done with, it’s down to the product that I think you should at least spend 90 MB of your plentiful bandwidth downloading (for those on a mobile browser, I’ll let you off, but for every other JFVI reader, there is no excuse!)

OK, really, that’s it with the plugs! I promise the rest of the post will be nothing but a slice of technical fried gold.

I’ve evaluated a number of free products and plugins for the Virtual Infrastructure, but Veeam Reporter 4.0 seems to be the most polished, and for a free product the limitations still leave you with something that adds value to your infrastructure. After downloading the install file, you’ll need to find a suitable box to run it on. I used a spare VM that had been servicing VMware Update Manager; it’s reasonably well specced for a VM but not especially busy, so an ideal candidate.

Reporter does require a few more pieces of the puzzle than just the installer, so you’ll also need to hand either a SQL box with a little space on it, or SQL Express Advanced edition, complete with SQL Reporting Services 2008. Using SSRS really turbocharges the functionality of the reporting product, especially within a Windows shop which may well have an SSRS instance already, and integrating the generated reports into a SharePoint instance is also a cinch. I initially ran the product without the SSRS integration; it is still a powerful tool for generating offline reports of your infrastructure in Excel, Acrobat, Word and Visio formats, but you really only get the full functionality by using Reporting Services. You’ll also need .NET Framework version 3 and IIS with Windows Authentication enabled.

Although you do get some things for nothing, you don’t quite get everything. Veeam have a document highlighting the differences between the free and paid versions here:
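If you like to tick things off before you start, here is a rough sketch of that shopping list as a small Python checklist. It is purely illustrative: the names (`Prerequisite`, `PREREQUISITES`, `missing_prerequisites`) are my own invention and have nothing to do with Veeam's installer, and the list only reflects what I needed for my own install, with Reporting Services marked as optional because the product does run without it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prerequisite:
    name: str              # short hypothetical key used for ticking items off
    note: str              # what the item means, as described in this post
    required: bool = True  # False for items the product can run without


# Prerequisites as listed in this post; not an official Veeam list.
PREREQUISITES = [
    Prerequisite("database", "SQL Server with a little space, or SQL Express Advanced"),
    Prerequisite("reporting_services", "SQL Reporting Services 2008", required=False),
    Prerequisite("dotnet_3", ".NET Framework version 3"),
    Prerequisite("iis_windows_auth", "IIS with Windows Authentication enabled"),
]


def missing_prerequisites(ready, include_optional=True):
    """Return the names of prerequisites not yet in the `ready` set."""
    return [
        p.name
        for p in PREREQUISITES
        if p.name not in ready and (p.required or include_optional)
    ]


if __name__ == "__main__":
    # Example: the database and .NET are sorted, the rest is still to do.
    for name in missing_prerequisites({"database", "dotnet_3"}):
        print("Still needed:", name)
```

Passing `include_optional=False` leaves Reporting Services out of the list, which matches a first install that only uses the offline reports.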

http://www.veeam.com/veeam_reporter_free_edition_wn.pdf

To summarise, you only get 24 hours of change management reporting, slightly limited offline reports and no PowerShell or Capacity Management functionality, but should you decide you can’t live without those features, they are only a licence key away!

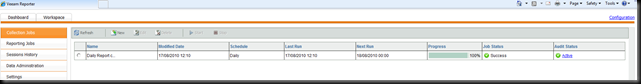

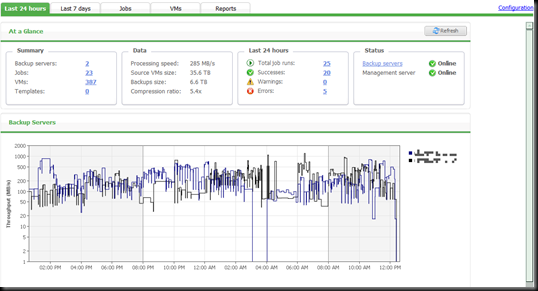

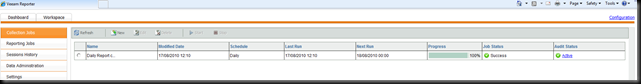

Access to the application is via a web front end. This allows you to configure data collection jobs so that you control the load against vCenter, and also to configure some regular reporting jobs. Integration with Veeam’s FREE Business View product is built in too, so that you can look at your environment from more than just the view offered by vCenter.

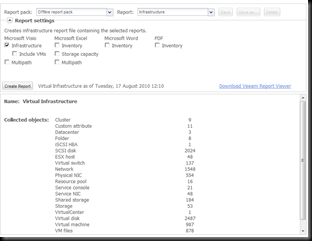

Data Collection Screen

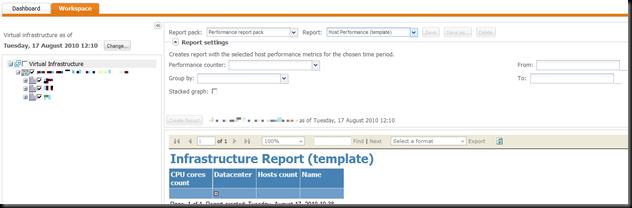

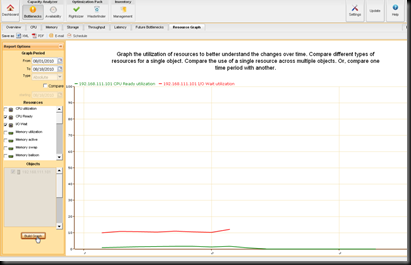

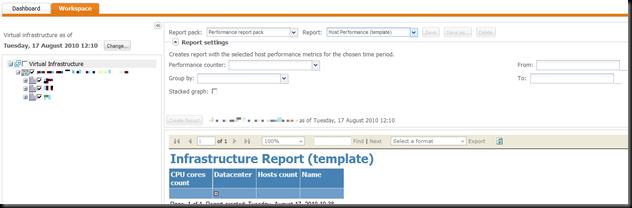

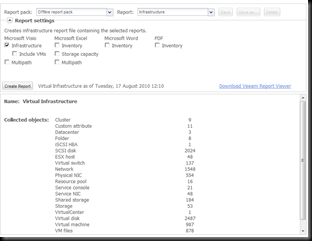

Once you have some data stored, you can move to the workspace tab and generate some reports with it. The left-hand side shows your current tree view (be it infrastructure or business view) and the right-hand side has a series of drop-downs to help you build your report. In the free version you are limited to the pre-canned reports provided by Veeam; should you purchase the licensed version, however, it is possible to create custom reports based on the data collected.

Report Workspace Screen

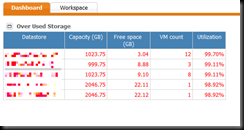

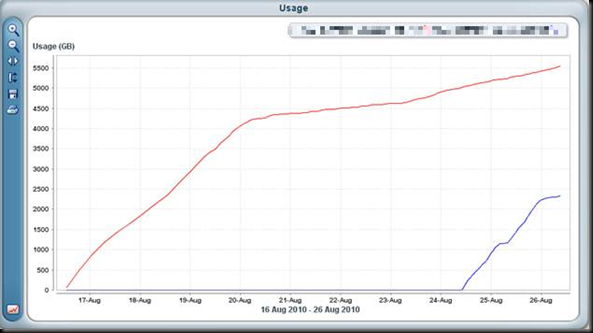

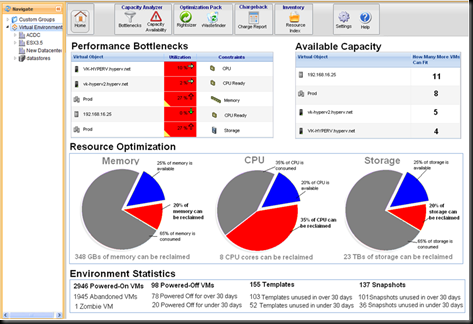

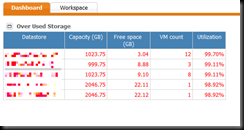

You can add a key report to the front screen dashboard, and it’ll be updated as soon as data collection is finished, though you are limited to just one widget in the free version. I chose datastores over capacity.

Dashboard

One of the main reasons the free version of Reporter interested me was as a documentation tool. I was recently asked to document a large portion of my virtual infrastructure, and felt that being able to export the maps from vCenter into Visio in a format I could modify would have made my life a lot easier. In Reporter, this is done using the offline report pack. You’ll need to be on a workstation with the Office tools you need installed, and also install the Veeam Report Viewer, a handy link to which is provided on the offline reporting workspace.

Build your report in a similar way to the SSRS reports, but when you run it, you’ll be able to download the complete report file, which contains all the elements you selected.

Offline Reporting Workspace

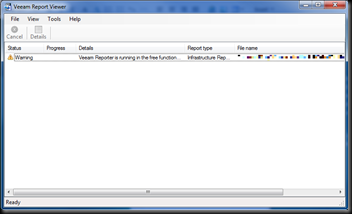

Report Viewer Interface

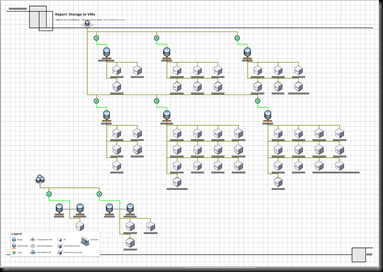

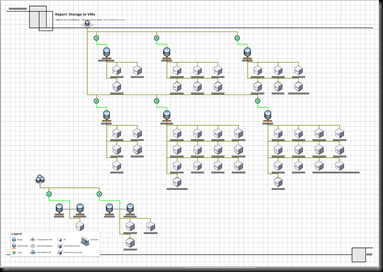

In the example above I’ve just gone for a simple infrastructure report of a 4-node cluster. This opened up a couple of Visio drawings, one of which I’ve shown below: a report of storage allocated to VMs.

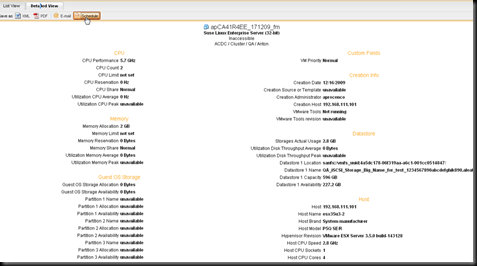

And it’s not just a pretty picture either: each of the objects has a full set of the data attributes for that object, from annotation to UUID. I lost count at 100 of them.

For smaller infrastructures, Veeam bundle the reporting product along with Monitor and their excellent backup and replication software as part of the Veeam Essentials Package.

I’ll finish off now with another shameless plug. This is a great free product for putting some initial documentation together, along with a great set of reports that you’ll want to check every day. So what are you waiting for? Please click the link below, download the product and help me donate $1000 to a worthy cause.

http://www.veeam.com/reporter-free-promo/jfvi.co.uk
