![Centrix Software [LOGO]](http://www.centrixsoftware.com/wp-content/themes/centrixsoftware/images/centrix_logo.gif)

I was invited to a call with Rob Tribe from Centrix Software last week, following advice from marketing guru and London VMUG ring-mistress Jane Rimmer that vendors should get in touch with bloggers. Fine advice if you ask me 🙂

I was aware of Centrix, having seen their booth at VMworld Europe, but I’m sorry to say I didn’t get a chance to speak to them there, so aside from a brief look at their site, it was all new to me.

We started with a brief look at how the company came to be: it grew from a number of VMware staff who recognised the challenges of taking the same approach to a VDI implementation as to a server consolidation project. When an application is installed on a server, there is a fairly good chance that it’s going to be used. When you have a VDI image with *every* application that’s been put into the requirement specification for that desktop golden image, it’s going to a) get quite bloated, b) require some considerable licensing and c) possibly not get all that heavily utilised.

Knowing what is currently deployed in your environment is not a new concept by any means. There are armfuls of packages available to collect an inventory from your clients, ranging from the free to the costly and covering many different platforms. To get the best from an inventory, many of these applications will deploy an agent to the endpoint in question.

Centrix can deploy its own agent, or take a feed from your existing systems management package, and apply some nifty analytics to it in order to give you a more accurate picture of the environment. Currently the key metric is executable start and stop times. This will of course give you the best data when your environment is rich in fat clients or installed software, and it gives you a true measure not only of total usage but also of the times of day at which those products are used, enabling you to build up a map of utilisation across your estate.
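To make the start/stop idea a little more concrete, here is a minimal sketch in Python of how that kind of record could be turned into a usage-by-hour map. This is my own illustration, not Centrix’s implementation: the record layout, the sample data and the function names are all assumptions.

```python
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical sample records: (executable, start time, stop time).
SESSIONS = [
    ("winword.exe", datetime(2010, 1, 4, 9, 15), datetime(2010, 1, 4, 11, 5)),
    ("excel.exe", datetime(2010, 1, 4, 10, 30), datetime(2010, 1, 4, 10, 50)),
    ("winword.exe", datetime(2010, 1, 4, 14, 0), datetime(2010, 1, 4, 14, 20)),
]


def hours_touched(start, stop):
    """Yield each hour of day (0-23) during which a session was running."""
    current = start.replace(minute=0, second=0, microsecond=0)
    while current < stop:
        yield current.hour
        current += timedelta(hours=1)


def usage_by_hour(sessions):
    """Count, per executable, how many sessions were active in each hour."""
    usage = {}
    for exe, start, stop in sessions:
        usage.setdefault(exe, Counter()).update(hours_touched(start, stop))
    return usage


usage = usage_by_hour(SESSIONS)
for exe, hours in sorted(usage.items()):
    print(exe, dict(sorted(hours.items())))
```

Run across a whole estate rather than three made-up sessions, the same counting gives a per-application picture of which hours of the day are busiest.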

This kind of information would be of great help when planning your VDI environment. Not only would you know the concurrency of the current landscape, but also which applications are most frequently used. Planning which applications should be a core part of your master images, and which ones can be deployed via an application virtualisation layer, would be made a lot smoother.
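Concurrency is the number that matters most for sizing and for concurrent licensing, and it falls out of the same start/stop records. The sketch below is an assumption-laden illustration (the function name and sample sessions are mine, not anything from the product): it sorts the start and stop events and keeps a running count of open sessions.

```python
from datetime import datetime


def peak_concurrency(sessions):
    """Return the maximum number of sessions open at the same moment.

    `sessions` is an iterable of (start, stop) datetime pairs.
    """
    events = []
    for start, stop in sessions:
        events.append((start, 1))   # a session opens
        events.append((stop, -1))   # a session closes
    # Tuples sort by time first; at identical times the -1 (stop) sorts
    # before the +1 (start), so back-to-back sessions do not overlap.
    events.sort()
    running = peak = 0
    for _, delta in events:
        running += delta
        peak = max(peak, running)
    return peak


# Hypothetical sessions of one application on a single day.
day = datetime(2010, 1, 4)
sample = [
    (day.replace(hour=9), day.replace(hour=10)),
    (day.replace(hour=9, minute=30), day.replace(hour=11)),
    (day.replace(hour=10), day.replace(hour=12)),
]
print(peak_concurrency(sample))
```

An application that is installed everywhere but never has more than a handful of sessions open at once looks like a better fit for an application virtualisation layer than for the base image.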

Having been given an overview of the product, I was looking forward to seeing a bit more under the hood as to how this “workspace intelligence” was achieved.

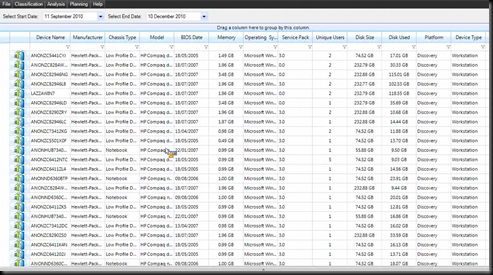

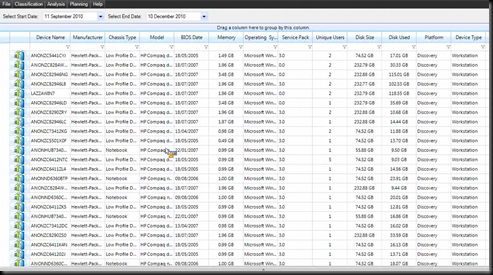

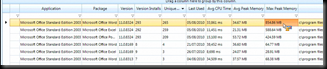

Rob started with the raw data as shown below. This isn’t anything massively new to write home about, but it’s interesting to know that the BIOS date of a physical machine is considered a reasonable metric for the age of the deployment, given that few machines would have a BIOS upgrade after they have been deployed to a user.

The unique users field also gives an indication of boxes that have not been logged into during a given monitoring period. This data would be of less importance for servers; after all, if a server has remained functional without anyone having to log into it, then it’s definitely a good thing! An unused workstation could perhaps be put onto a watch list of systems to decommission.
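As a rough sketch of that watch list idea, assuming an inventory export with a device name, a server flag and a unique user count (these field names are hypothetical, not the product’s schema):

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    is_server: bool
    unique_users: int  # distinct logons seen during the monitoring period


def decommission_watch_list(devices):
    """Return names of workstations nobody logged into during the period.

    Servers are skipped: a server with no interactive logons is usually fine.
    """
    return sorted(
        d.name for d in devices if not d.is_server and d.unique_users == 0
    )


# Hypothetical inventory rows.
inventory = [
    Device("WKS-0012", is_server=False, unique_users=3),
    Device("WKS-0047", is_server=False, unique_users=0),
    Device("SRV-SQL01", is_server=True, unique_users=0),
]
print(decommission_watch_list(inventory))
```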

For each device you can drill down to further information, picking up installed hardware and other common metrics.

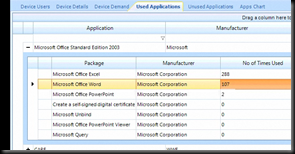

So that’s the raw data. How do you go about presenting it? The example graph below shows the number of applications a given workstation is running over the capture period.

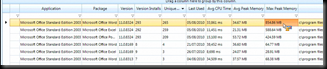

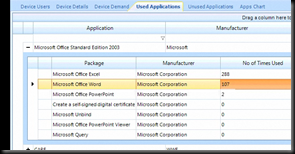

We can look at this data in a number of ways to help build up our application “map”. For example, looking at some metrics of MS Office utilisation…

Based on the above data, would you deploy PowerPoint to your VDI base image? The number of times an application is opened doesn’t give you much of a picture of how heavy-hitting that application is. For this metric, Centrix have elected to use average CPU time (rather than % utilisation, given the heterogeneous nature of CPUs across the estate).
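To show why launch counts and CPU time can tell different stories, here is a small sketch that summarises both per executable. The sample figures are invented, and averaging CPU seconds per launch is my assumption about how such a metric might be calculated, not a description of how Centrix computes it.

```python
from collections import defaultdict

# Hypothetical metering records: (executable, CPU seconds used in that run).
RUNS = [
    ("outlook.exe", 30.0),
    ("outlook.exe", 30.0),
    ("outlook.exe", 30.0),
    ("outlook.exe", 30.0),
    ("powerpnt.exe", 120.0),
]


def summarise(runs):
    """Return launch count and average CPU seconds per launch, per executable."""
    totals = defaultdict(lambda: [0, 0.0])  # exe -> [launches, cpu seconds]
    for exe, cpu_seconds in runs:
        totals[exe][0] += 1
        totals[exe][1] += cpu_seconds
    return {
        exe: {"launches": count, "avg_cpu_seconds": cpu / count}
        for exe, (count, cpu) in totals.items()
    }


summary = summarise(RUNS)
for exe, stats in sorted(summary.items()):
    print(exe, stats)
```

In this made-up data PowerPoint is opened least often but is the heaviest per launch, which is exactly the sort of thing a launch count on its own would hide.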

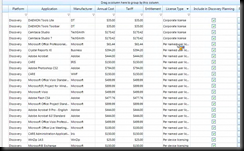

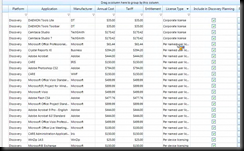

In addition to looking at software utilisation across your workstations, the same details can be used to help with licence management. Rob was careful to point out that Centrix isn’t a licence management product, but it could certainly help with decisions around the deployment of per-instance licensed software.

With the main features of the product suitably demonstrated, we talked a little about the product itself. It is a regular two-tier application, running on Windows with a Microsoft SQL Server back end. We didn’t have much time to talk about scaling the product up to the enterprise space, but I’d like to see how it would cope with estates of over 40,000 or 50,000 workstations.

The agent itself is pretty streamlined. They have managed to get it down to a 500k install, which seems small enough, though I’d like to see what impact an agent that is actively collecting would have on a workstation’s resources.

“Out of the box”, the product ships with 40 different reports on the raw data, to enable you to pull out the common detail with ease. While I don’t generally harp on too much about vNext features, version 5 of the product, due for launch early in the new year, will feature a community reporting portal, hopefully along the lines of Thwack!, the portal from SolarWinds that enables content exchange between users of the Orion products.

I think the product is quite niche, and the sell of its features to budget holders isn’t necessarily about the technology or cost side of the product (approximate real cost of £20 per desktop) but about the compliance and political side: stakeholders have a tendency to object to user management via IT, and to the perceived “Big Brother” feeling that inventory and software metering agents tend to invoke in a user population.

I look forward to seeing how the product develops, with v5 proposed to go beyond analysis of thick client usage and into looking at how thin client applications accessed via a browser are used. Matching this kind of data up with traditional monitoring data from the application back end would be most interesting.
