If there is one thing that all VMware monitoring solutions have in common, it is a connection to vCenter. The vCenter Web Service is a single point of contact between the outside world and the key performance APIs. What you do with that data once you have collected it varies from application to application, but they all make that underlying connection to vCenter.

This puts vCenter in a special position within the infrastructure, namely that of a Single Point of Failure (SPoF). As the ecosystem has grown up around vSphere, admins have become more and more reliant on vCenter: their backups depend on it, their monitoring depends on it and in some cases their orchestration layer depends on it. That’s a serious number of dependencies to have on a single box. Protecting vCenter from a hardware point of view is quite straightforward: just deploy it as a virtual machine. If you are going down the vCenter appliance route, this is your only choice.

Even if you cover many of the bases when it comes to protecting vCenter, there are still a few cases where you might lose it, e.g. storage corruption, back-end database failure or, even worse, a catastrophic failure of the management cluster. When things are going pear-shaped, you still want someone to keep an eye on the business while you are fixing it.

This is where System Center really comes to the rescue. Because it is an end-to-end monitoring framework, it’s going to be looking at the big picture, sometimes from the application stack downwards. The health of the virtual infrastructure is simply one component of that picture. Letting the corporate central Operations Centre folk keep an eye on things while you concentrate on fixing the root cause of the outage is going to lead to a faster fix all round, with the subject matter experts doing what they do best!

However, if vCenter is down, how can we monitor the estate? None of the tools will connect to a web service that isn’t there. With the Veeam Management Pack, we can use the Recovery Action feature and some PowerShell to automate our own Veeam Virtualisation Extensions so that they talk directly to the hosts if they can’t talk to vCenter. Let’s walk through an example.

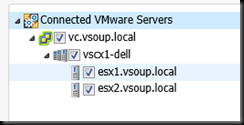

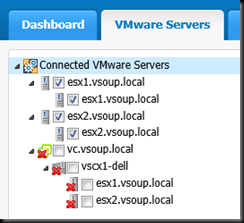

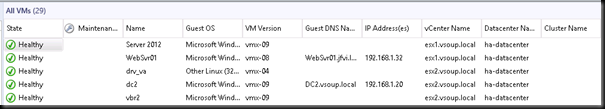

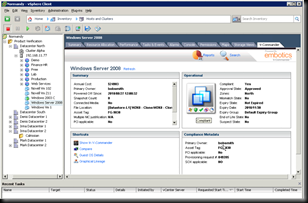

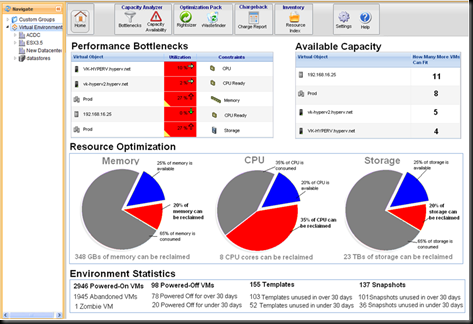

Here is my little test setup: I have a couple of vCenters, with a few hosts and VMs underneath. I’m monitoring it in SCOM 2012 quite happily.

The Veeam Extensions service is merrily talking away to vCenter, bringing in events, metrics and topology information.

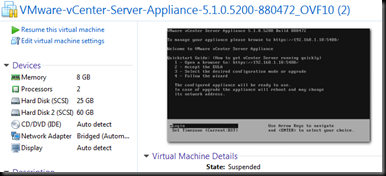

Merrily, that is, until (fanfare: Dun dun Dah!) disaster strikes, or in my case I suspend my vCenter appliance.

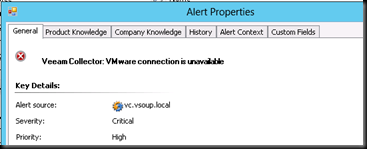

At this point, with the standard version of the management pack, you would see the following alert and you would be left without vSphere-level monitoring until you could bring vCenter back to life. No host hardware information, no datastore health.

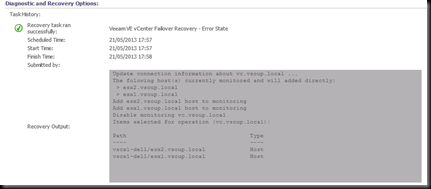

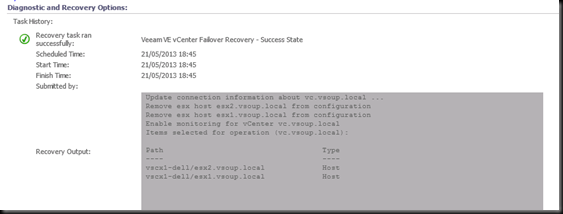

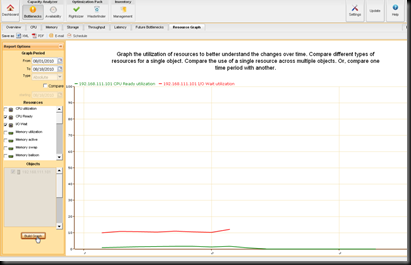

I have managed to persuade the R&D wizards at Veeam to let me in on a very sneak preview of some upcoming functionality that should be appearing in the not too distant future. In the improved version, the alert above will trigger a Recovery Action. This action runs a PowerShell script to change the connection points from the vCenter to the hosts directly. SCOM has been configured with a credential profile for a root-level account on each host.

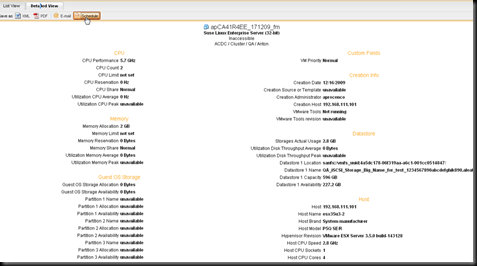

Once this script is complete, the VESS connection looks like this. The vCenter connection has been disabled (unchecked); no harm in that, as it’s offline anyway. Direct-to-host connections have been created automatically by the monitor Recovery Action, using our PowerShell interface.
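To make the state change concrete, here is a minimal sketch of what the failover amounts to, written in Python purely as a model. It is not the Veeam PowerShell interface: the `Connection` type, the `fail_over_to_hosts` function and the example hostnames are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass(frozen=True)
class Connection:
    """Hypothetical model of one monitoring connection (not a Veeam type)."""
    target: str                   # hostname of a vCenter or an ESXi host
    kind: str                     # "vcenter" or "host"
    parent: Optional[str] = None  # for a host: the vCenter it belongs to
    enabled: bool = True


def fail_over_to_hosts(connections: List[Connection],
                       vcenter: str,
                       hosts: List[str]) -> List[Connection]:
    """Disable the given vCenter connection and add one direct connection per host."""
    # Disable (rather than delete) the vCenter connection so it can be restored later.
    updated = [
        replace(c, enabled=False)
        if c.kind == "vcenter" and c.target == vcenter else c
        for c in connections
    ]
    # Add a direct connection for every host that does not already have one.
    known = {c.target for c in updated if c.kind == "host"}
    for host in hosts:
        if host not in known:
            updated.append(Connection(target=host, kind="host", parent=vcenter))
    return updated


# Example hostnames are made up for this sketch.
before = [Connection("vcenter01.example.local", "vcenter")]
after = fail_over_to_hosts(
    before,
    "vcenter01.example.local",
    ["esx01.example.local", "esx02.example.local"],
)
for conn in after:
    print(conn.target, conn.kind, "enabled" if conn.enabled else "disabled")
```

The vCenter entry is kept but switched off, which mirrors the unchecked connection in the screenshot and leaves something to switch back on when vCenter returns.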

A short while afterwards, this change is reflected in the SCOM topology.

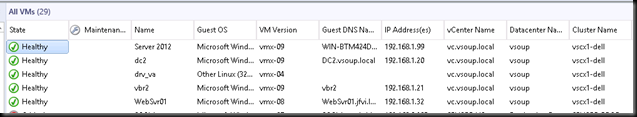

Note how we are now looking at the hosts as individuals. Without vCenter there isn’t any vMotion, so virtual machines will remain on the hosts they were running on when vCenter became unavailable. Monitoring teams can continue to keep an eye on the health of virtual machines and hosts for the duration of the outage.

Once vCenter is available again, the collectors run the recovery action in reverse in order to resume monitoring via vCenter.
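The reverse step can be modelled in the same spirit. The sketch below is Python used as a neutral notation, not the Veeam PowerShell interface, and every name in it (`Connection`, `fail_back_to_vcenter`, the hostnames) is hypothetical. It also assumes that the temporary direct-to-host connections are removed on failback; the real action may simply disable them.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass(frozen=True)
class Connection:
    """Hypothetical model of one monitoring connection (not a Veeam type)."""
    target: str                   # hostname of a vCenter or an ESXi host
    kind: str                     # "vcenter" or "host"
    parent: Optional[str] = None  # for a host: the vCenter it belongs to
    enabled: bool = True


def fail_back_to_vcenter(connections: List[Connection],
                         vcenter: str) -> List[Connection]:
    """Re-enable the given vCenter connection and drop its direct host connections."""
    restored = []
    for c in connections:
        if c.kind == "vcenter" and c.target == vcenter:
            restored.append(replace(c, enabled=True))
        elif c.kind == "host" and c.parent == vcenter:
            # Assumption: temporary direct connections are removed, not kept.
            continue
        else:
            # Connections belonging to other vCenters are left untouched.
            restored.append(c)
    return restored


# State during the outage; hostnames are made up for this sketch.
during_outage = [
    Connection("vcenter01.example.local", "vcenter", enabled=False),
    Connection("esx01.example.local", "host", parent="vcenter01.example.local"),
    Connection("esx02.example.local", "host", parent="vcenter01.example.local"),
    Connection("vcenter02.example.local", "vcenter"),
]
recovered = fail_back_to_vcenter(during_outage, "vcenter01.example.local")
for conn in recovered:
    print(conn.target, conn.kind, "enabled" if conn.enabled else "disabled")
```

Tracking which vCenter each host belongs to matters in a setup like mine with more than one vCenter, because failing back one of them should not disturb the connections of another.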

Notice how the vCenter and datacenter names for the virtual machines have changed back.

As an added bonus, we are able to execute tasks on the underlying virtual machines (such as power on / power off) even when vCenter is not available. This gives us the ability not only to look at the environment but also to administer it, even when the centralised administration function is down. Admins can control power states and manage snapshots without having to connect manually to each host in turn. The rest of the System Center suite has no dependency on vCenter either: the Veeam MP is able to drive data into System Center Orchestrator and System Center Service Manager to maintain the host / VM CMDB.

By using Microsoft System Center Operations Manager 2012 along with PowerShell automation, we are enabling an enterprise systems management team to continue to function during periods of vCenter outage. Keep watching for further releases, and watch those SPoFs!

![f09cb019-7f5b-4171-ae39-588eaadc1429[6] f09cb019-7f5b-4171-ae39-588eaadc1429[6]](http://jfvi.co.uk/wp-content/uploads/2010/11/f09cb0197f5b4171ae39588eaadc14296_thumb.png)
