There is no shortage of information online and across social media about Veeam’s products. Veeam markets them well and has a good following because the products are pretty reliable, cost-effective and, well, really quite good 🙂

What seems to be lacking is a good public case study of usage outside a demo lab. We’ve all seen the demos where it takes a backup in the blink of an eye, restores it, then makes you a cup of tea before finding your favourite channel on Sky and recording the film you were about to miss (dramatisation: may not actually make you tea!)

I’ve recently been through a project to implement the Veeam backup product in our non-production environment. To give you a little background, this environment was recently migrated across the Atlantic from a datacenter where NetBackup was king. Moving to a “fresh” environment, previously used only for disaster recovery, allowed us to pick a new product that would offer the level of protection we required with the most effective use of resources.

On to the workload: it consists of over 400 VMs across 2 clusters, both attached to Fibre Channel storage. Source data is currently over 40 TB and is expected to grow beyond 50 TB in the not too distant future. As this is a pre-production environment, the virtual machines run a lot of application and SQL servers, in addition to 2 complete AD forests with separate resource domains.

At the proof of concept stage I had zero budget for hardware, so I evaluated the product in 2 different ways: on an elderly physical server I’d scrounged, and as a virtual machine running in virtual appliance mode. This let me see what pitfalls I’d come across as I progressed.

The brief for protection was to capture a minimum of 14 days of backups, with no long-term retention required (these environments are often rebuilt, so long-term storage wasn’t a consideration at this stage).

After building the PoC machines and installing the application (as many other people have said, this is very straightforward), I was able to start taking backups pretty much straight away on the virtual appliance. The physical backup proxy needed some configuration at the SAN end so that its HBA could be zoned to the same LUNs as were presented to the source cluster (with a mix of storage vendors, this was… interesting). It’s also vital that you open a diskpart prompt and prevent automatic mounting of volumes, so that Windows doesn’t try to mount the VMFS LUNs it can now see: “automount disable” and “automount scrub” are the commands to use, followed by a reboot.

Wanting to get backups up and running as soon as possible, I simply created a single job per server (at the time, around 100 VMs per proxy), using the hosts themselves as the job’s selection criteria. This gave a “fail-safe” backup: if someone deployed a virtual machine into that cluster, it would be backed up no matter what. With a relatively low number of source machines per proxy this wasn’t an issue, as I was just about able to get the backups completed inside a 24-hour window once the initial pain of the first full backup was over.

The proof of concept machines churned away for a couple of months (with the help of an extended trial licence kindly provided by our account team at Veeam), but as more and more machines were migrated into the environment, backups took longer and longer, until the jobs were taking an unreasonable time to complete (in excess of 72 hours). It was time for some performance tuning.

Some of the items on the tuning list were within my power; some were not. For a start, we had no concurrency in the backup streams, so even though I’d built a pretty powerful virtual machine as the appliance, we were not seeing the benefit. This would have to be addressed by a fundamental change in job design, which I will come to later. On the physical proxy side, the hardware I’d been able to scrounge was a pretty elderly DL380G3 with a couple of CPUs, also not up to running multiple jobs. Due to some logistical issues, we also had a less than ideal fabric configuration, which was affecting data transfer rates between source and target; this would have to wait for the storage team to fix.

I knew that for the application to move to production, I’d have to make some significant changes. I started by changing my initial plan of deploying multiple proxies as virtual appliances, because at the time there seemed to be a few teething problems with the virtual appliance letting go of its allocated disks and committing the snapshots taken during backup. This meant I had to keep a very close eye on snapshot creation and manually release any volumes each morning, not a task that would be acceptable in a production system. I’m happy to say that just before I stopped using the virtual appliance, an update from Veeam to version 4.1.2 resolved these issues, which may have been related to how the hotadd functionality is implemented within VMware. A virtual appliance still remains part of the final design, but more as an emergency box. The design moved to 2 new blades as backup proxies; the power of the BL460G6 would be more than enough to let us run many overlapping jobs.

From conversations with our systems engineer at Veeam and a little research on their forums, it seems that you don’t really want to run backup jobs containing more than about 2 TB of source VMs. For the first cluster, that meant the new servers would need 11 jobs to back up all the source VMs. How to divide these jobs was a bit of a concern initially, and required a little housekeeping within the virtual infrastructure. VMs were organised by application group (clearly defined in our internal CMDB) into subfolders underneath the SLA. I then created the jobs, selecting folders in alphabetical order until each job’s load was around 2 TB; where I knew an application expected significant growth, I left space in the job.

If we had organised our VMs by application using something like Business View, it would have been nice to have Business View integrate with the backup application, and even nicer if it could have auto-provisioned the jobs based on the folders. Because the VM folders are part of the job selection, the jobs will adjust to new VMs, provided they are put into the right folders, of course! I could have done this with resource pools or vApps, but as we operate a flat landscape from a resource pool point of view, this wasn’t needed.
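The folder-to-job split described above is essentially a greedy “fill until full” pass over an alphabetical list. Here is a minimal sketch of that idea, assuming each application folder’s size is known and that an optional growth allowance is reserved for folders expected to grow. The `AppFolder`, `BackupJob` and `plan_jobs` names, and the folder names and sizes in the demo, are hypothetical and not part of any Veeam API; the 2 TB target is the rule of thumb quoted above.

```python
from dataclasses import dataclass, field


@dataclass
class AppFolder:
    """One application-group VM folder (hypothetical names and sizes)."""
    name: str
    size_tb: float           # current source size of the VMs in the folder
    growth_tb: float = 0.0   # extra space to leave for expected growth


@dataclass
class BackupJob:
    folders: list = field(default_factory=list)
    load_tb: float = 0.0


def plan_jobs(folders, target_tb=2.0):
    """Greedily fill jobs with folders taken in alphabetical order.

    A job is closed when adding the next folder (plus its growth allowance)
    would push it past target_tb. A folder bigger than target_tb on its own
    still gets a job of its own, so no folder is ever left out.
    """
    jobs = []
    current = BackupJob()
    for folder in sorted(folders, key=lambda f: f.name.lower()):
        needed = folder.size_tb + folder.growth_tb
        if current.folders and current.load_tb + needed > target_tb:
            jobs.append(current)
            current = BackupJob()
        current.folders.append(folder.name)
        current.load_tb += needed
    if current.folders:
        jobs.append(current)
    return jobs


if __name__ == "__main__":
    folders = [
        AppFolder("Payroll", 0.9),
        AppFolder("CRM", 1.2, growth_tb=0.5),  # app known to be growing
        AppFolder("Intranet", 0.4),
        AppFolder("Billing", 0.7),
        AppFolder("Warehouse", 1.6),
    ]
    for i, job in enumerate(plan_jobs(folders), start=1):
        print(f"Job {i:02d}: {', '.join(job.folders)} (~{job.load_tb:.1f} TB)")
```

Keeping the alphabetical order, rather than searching for an optimal bin packing, mirrors the manual process and keeps each job’s membership easy to predict. The trade-off is that some jobs end up noticeably under the target.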

Because these were new jobs (on new boxes), I had to go through the pain of building full backups again. In my impatience I set too many off at once and made an unwelcome discovery: what I had been told were nice new blades were in fact hand-me-downs with only a pair of dual-core CPUs. Trying to run 8 concurrent jobs made them sweat a bit, but after a couple of days they were all done. The jobs now fire off at 2-hour intervals throughout the day and take 2 to 4 hours each to complete. This eases the load on the host, completes the entire backup within a 24-hour window and gives a restore window for each job, which was previously not possible. I’ve recently repeated the process with the second server and cluster, which is just about to finish its full backups, but I think it’s safe to say it’s a pretty busy little setup!
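To see why staggering helps, here is a minimal sketch that lays jobs out at a fixed start interval and reports when the last one finishes and how many overlap at worst. It assumes, hypothetically, that all 11 jobs from the first cluster run on one proxy in a single sequence and that every job hits the 4-hour upper end; the `stagger` and `peak_concurrency` names are made up for illustration.

```python
def stagger(durations_h, interval_h=2.0, first_start_h=0.0):
    """Return (start, end) windows, in hours, for jobs launched one every interval_h hours."""
    return [
        (first_start_h + i * interval_h, first_start_h + i * interval_h + d)
        for i, d in enumerate(durations_h)
    ]


def peak_concurrency(windows):
    """Largest number of jobs running at once.

    A job ending at time t is not counted as overlapping a job starting at t.
    """
    events = []
    for start, end in windows:
        events.append((start, +1))
        events.append((end, -1))
    # Sorting by (time, delta) puts ends (-1) before starts (+1) at the same instant.
    events.sort()
    running = peak = 0
    for _, delta in events:
        running += delta
        peak = max(peak, running)
    return peak


if __name__ == "__main__":
    # Hypothetical worst case: 11 jobs, each taking the full 4 hours.
    windows = stagger([4.0] * 11)
    last_finish = max(end for _, end in windows)
    print(f"last job finishes at hour {last_finish:g}")
    print(f"peak concurrent jobs: {peak_concurrency(windows)}")
```

Under these assumptions the last job still finishes inside 24 hours and no more than 2 jobs run at once, compared with the 8 concurrent jobs that made the dual-core blades sweat during the full-backup build.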

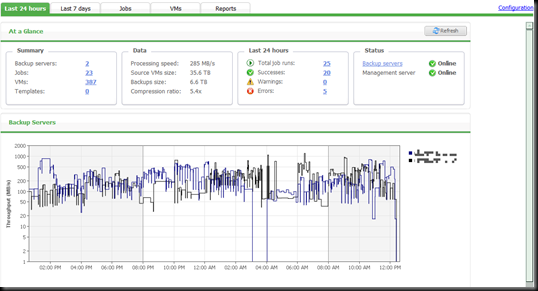

The black trace is the server building up its full backups as I write, so its counters are a little low; there are a good few more VMs to add! I’m looking forward to upgrading this setup to v5 and putting a little vPower into the environment 🙂

What I learned from my Veeam deployment

- Split your jobs into bite-sized chunks: your backups will complete within the window more reliably, and you’ll be able to restore when you want.

- It’s not only the size of the dataset that dictates how long a backup takes. The number of VMs has a significant impact too, because of the per-VM overhead of CBT analysis and snapshot management.

- When you deploy more than one proxy, make sure you also deploy Enterprise Manager. Not only does it produce easy-to-read reports, it also gives application teams a very handy way to see whether a VM is being backed up.

- When building your full backup set, don’t kick the jobs off all at once.

- If you plan on running concurrent backup streams, make sure you have plenty of CPU cores available.
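The point about VM count can be made concrete with a back-of-the-envelope model: job time is the time to move the changed data plus a fixed cost paid for every VM (snapshot create and commit, CBT query). This is a rough sketch, assuming VMs in a job are processed one after another; the `estimated_job_hours` function and the throughput, overhead and data figures are hypothetical, not measurements from this deployment.

```python
def estimated_job_hours(changed_gb, vm_count, throughput_mb_s, per_vm_overhead_min):
    """Very rough incremental job duration in hours.

    Assumes VMs are processed sequentially, so the job pays a fixed per-VM
    cost (snapshot create/commit, CBT query) on top of the time spent
    moving the changed data at a steady throughput.
    """
    transfer_h = changed_gb * 1024 / throughput_mb_s / 3600
    overhead_h = vm_count * per_vm_overhead_min / 60
    return transfer_h + overhead_h


if __name__ == "__main__":
    # Same amount of changed data, spread over a few large VMs or many small ones.
    # All figures are illustrative only.
    for vms in (20, 120):
        hours = estimated_job_hours(changed_gb=200, vm_count=vms,
                                    throughput_mb_s=100, per_vm_overhead_min=1.5)
        print(f"{vms:>3} VMs: ~{hours:.1f} h")
```

With identical data, the job with six times as many VMs takes several times longer purely because of the per-VM overhead, which is why job sizing by data volume alone can be misleading.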
