I’ve been trying to get some work done towards my VCDX qualification for some time. With the first part of the qualification, the Enterprise Admin exam, booked for April, I felt it was a good time to put pen to paper and make a few study notes along the way.

If you are considering your VCDX qualification in the not too distant future, then check out the “Brown Bag” sessions, an idea conceived by Tom Howarth of planetvm.net and Cody Bunch of professionalvmware.com. Tom and Cody’s posts have inspired me to blog some of my study notes. Hopefully this will make it stick for me, and if it’s a point you need to improve on, it might just stick with you too…

Working in a 100% Fibre Channel shop at the moment, I don’t get much day-to-day exposure to iSCSI storage technology, so I have always felt it to be a slight weak point in my skillset. As a result, it seemed the natural choice to start my command-line revision with.

Scenario/AIM

I have a spare host that I’m planning to use for this ‘mini-lab’. It currently has plenty of space on a local datastore, and it’s running ESX 3.5 Update 5.

The aim is to install a VM to provide some virtual storage, which I’m going to present back to the host in two forms: an iSCSI datastore and a regular share via NFS (could be handy for hosting build ISO files at some point!).

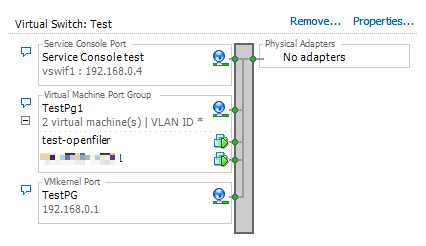

As I don’t really want anything else connecting to my test environment, I shall be hosting the storage on an internal vSwitch with no uplinks. Of course, in a ‘real’ environment you would use a regular vSwitch.

In order for the Service Console and VMkernel to be aware of the storage VM, I will need to connect a Service Console port and a VMkernel port to the internal vSwitch, as well as a NIC from an administrative VM so that I can set the storage up.

I’ve chosen to use Openfiler for the storage VM. In previous lives I have used virtualised FreeNAS boxes, but Openfiler has a good level of community support and well-documented setup guides for use with ESX.

I won’t reproduce the guides for the Openfiler config word for word here, but will instead link you to Simon Seagrave’s excellent guide for Openfiler setup and config at http://www.techhead.co.uk/how-to-configure-openfiler-v23-iscsi-storage-for-use-with-vmware-esx

As well as presenting a 5GB LUN for iSCSI, I have also enabled the NFS service on the Openfiler appliance and created a 4GB shared volume for use with NFS. I’m aiming to complete the tasks using only an SSH session to the host, though I’ll log into the VI client to verify things.

Network Setup.

Create test internal switch :

esxcfg-vswitch -a Test

Create test internal port group:

esxcfg-vswitch -A TestPG Test

Add VMKernel Port:

esxcfg-vmknic -a -i 192.168.0.1 -n 255.255.255.0 -m 9000 TestVMK

Add Service Console Port:

esxcfg-vswif -a vswif1 -p "TestPG" -i 192.168.0.4 -n 255.255.255.0

After connecting my admin VM to the vSwitch, I end up with a switch looking like this:
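The same layout can also be checked from the SSH session using the list switch (-l) of each tool. What follows is only a sketch: the `run` wrapper, the `show_network` function and the `DRY_RUN` variable are my own hypothetical helpers, not part of ESX, and by default the commands are printed rather than executed.

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
# Set DRY_RUN=0 on the ESX service console to actually run them.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical wrapper: print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

show_network() {
    run esxcfg-vswitch -l   # vSwitches and their port groups
    run esxcfg-vmknic -l    # VMkernel NICs
    run esxcfg-vswif -l     # service console interfaces
}

show_network
```

With `DRY_RUN=0` on the host, the output should list the Test vSwitch along with the new VMkernel and vswif1 interfaces.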

The easy step is to mount the NFS store. This is done with the esxcfg-nas command, giving the NFS host with -o, the exported path with -s and the datastore label last:

esxcfg-nas -a -o 192.168.0.2 -s /mnt/nfs/testnfs/TEST/ TEST

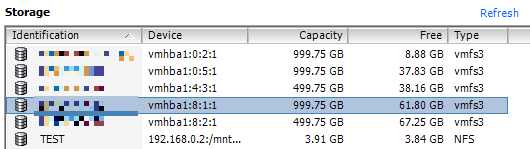

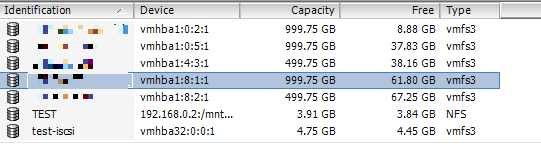

A quick check back to the VI client shows the NFS datastore (small as it is) ready for use.

(Yes, I know one of those datastores is dangerously full 🙂)
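As a rough sketch of how the esxcfg-nas arguments fit together (host after -o, export path after -s, datastore label last), here is a small helper that only prints the command. `nas_add_cmd` is a hypothetical name of my own; the values are the ones from this lab.

```shell
#!/bin/sh
# Hypothetical helper: prints the esxcfg-nas command for a given
# NFS host, export path and datastore label, without running it.
nas_add_cmd() {
    host="$1"
    share="$2"
    label="$3"
    echo "esxcfg-nas -a -o $host -s $share $label"
}

# Values taken from this lab.
nas_add_cmd 192.168.0.2 /mnt/nfs/testnfs/TEST/ TEST

# On the host itself, run the printed line, then list the NAS mounts with:
#   esxcfg-nas -l
```

Running `esxcfg-nas -l` afterwards is a quick way to confirm the mount without leaving the SSH session.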

Enabling the iSCSI connection is going to take a few more commands. Not only do we have to enable the software iSCSI initiator, but we also have to partition the LUN and then format the VMFS datastore.

First things first: enable the software initiator.

esxcfg-swiscsi -e

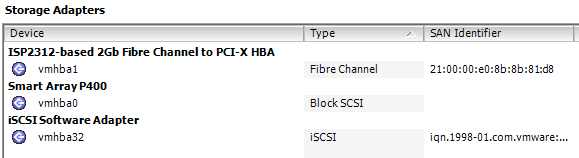

This will add an extra vmhba to the host. As I’m running ESX 3.5, that is always going to be called vmhba32; if I were running ESX 3.0, it would have been vmhba40.

I then need to connect the vmhba to the target device. Note that the -D switch selects dynamic discovery mode and -a adds the target address.

vmkiscsi-tool -D -a 192.168.0.2 vmhba32

Once this is done, scan the HBA for targets. This can take a few moments to complete.

esxcfg-swiscsi -s
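Put together, the three iSCSI steps above could be scripted roughly as follows, with two list/query calls added at the end as a check. This is a sketch under assumptions: `run`, `iscsi_connect`, `HBA`, `TARGET` and `DRY_RUN` are hypothetical names of my own, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical wrapper: print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

HBA="vmhba32"          # software iSCSI adapter name on ESX 3.5
TARGET="192.168.0.2"   # the Openfiler VM in this lab

iscsi_connect() {
    run esxcfg-swiscsi -e                      # enable the software initiator
    run vmkiscsi-tool -D -a "$TARGET" "$HBA"   # add a dynamic discovery address
    run esxcfg-swiscsi -s                      # rescan the adapter for targets
    run esxcfg-swiscsi -q                      # query whether the initiator is enabled
    run vmkiscsi-tool -D -l "$HBA"             # list the discovery addresses
}

iscsi_connect
```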

Where does this leave us? We have a target LUN presented to the host, but no partitions on it. From the VI client it is quite easy to create a datastore on it, but this isn’t about doing things the easy way 🙂

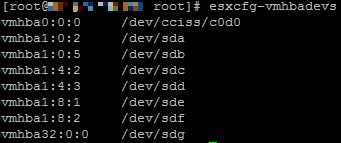

You’ll need to create a partition on the disk, but which disk?

Issuing the following command gives me this:

The service console has assigned /dev/sdg to the disk, which we can then partition with fdisk.

fdisk /dev/sdg

Press ‘n’ to create a new partition, then accept the defaults.

However, we’re not out of the woods yet: the partition type needs to be changed to VMFS.

Hit ‘t’ to change the partition ID, then enter ‘fb’ for VMware VMFS. Finally, hit ‘w’ to write the changes to disk.
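For repeat runs, the same keystrokes can be piped into fdisk instead of typed. This is a hedged sketch rather than a recommended procedure: `fdisk_keys`, `DISK` and `CONFIRM` are hypothetical names of my own, the exact prompts can differ between fdisk versions, and the script only touches the disk when `CONFIRM=yes` is set explicitly.

```shell
#!/bin/sh
# Keystrokes for fdisk, one per line:
#   n, p, 1, <Enter>, <Enter>   new primary partition 1, default start and end
#   t, fb                       set the partition type to fb (VMware VMFS)
#   w                           write the partition table and exit
fdisk_keys() {
    printf 'n\np\n1\n\n\nt\nfb\nw\n'
}

DISK="${DISK:-/dev/sdg}"   # device from this lab; check yours before running

# Destructive, so fdisk is only invoked when CONFIRM=yes is set.
if [ "${CONFIRM:-no}" = "yes" ]; then
    fdisk_keys | fdisk "$DISK"
else
    echo "Would send these keystrokes to: fdisk $DISK"
    fdisk_keys
fi
```

Doing it interactively the first time is still worthwhile, since it shows exactly which prompts your fdisk presents.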

Now that we have a partition of the correct type, we can format a VMFS datastore on it:

vmkfstools -C vmfs3 -S test-iscsi /vmfs/devices/disks/vmhba32:0:0:1

Refreshing storage in the VI client shows the datastore sitting happily there.

I hope this has been helpful. It’s certainly been a good refresher for me, not having played with iSCSI since my VCP 3.5 revision. All comments are welcome here or on Twitter (@chrisdearden).
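The device path passed to vmkfstools follows the adapter:target:LUN:partition pattern, so a small helper makes the pieces explicit. This is a sketch only: `vmfs_device`, `DEV` and `LABEL` are hypothetical names of my own, and both vmkfstools lines are printed rather than executed so that nothing gets formatted by accident.

```shell
#!/bin/sh
# Hypothetical helper: builds the ESX 3.x device path for a partition
# from adapter, target, LUN and partition number.
vmfs_device() {
    echo "/vmfs/devices/disks/$1:$2:$3:$4"
}

DEV="$(vmfs_device vmhba32 0 0 1)"
LABEL="test-iscsi"

# Format command, as used in this post (printed, not run).
echo "vmkfstools -C vmfs3 -S $LABEL $DEV"

# After formatting, this queries the attributes of the new volume.
echo "vmkfstools -P /vmfs/volumes/$LABEL"
```

The second printed command gives a command-line alternative to refreshing storage in the VI client.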
