In what seems to have become a bit of a theme on JFVI, I’ve been taking a peek at a recently released product, listening to what the Marketing/Sales ladies & gents have to say, then having a poke around with the product to see if they’ve been truthful (allegedly, Sales & Marketing people have sometimes been a little economical with the truth over the ages – I’m sure it happens much less now, but it’s always good to check, don’t you think?)

I have only recently become aware of the Kaviza solution, since VMworld, where a number of people seemed to rate the offering pretty highly; notably, it won the Best of VMworld 2010 award for Desktop Virtualisation, which isn’t to be sneezed at. It has also won awards from Gartner and CRN, and at the Citrix Synergy show it won the Business Efficiency award.

It seems a fair amount of “silverware” for a company that launched its first product in January 2009, but being a new player to the market does not seem to have put off Citrix, which made a strategic investment in Kaviza in April of this year.

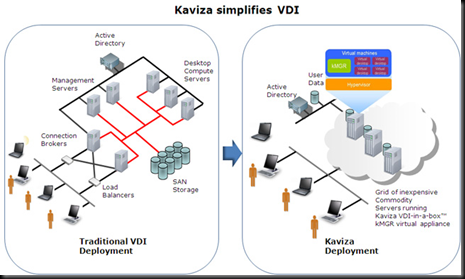

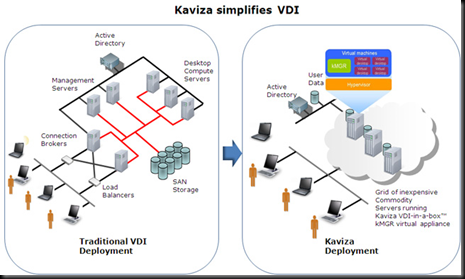

I spoke with Nigel Simpson from Kaviza to find out a little more. The key selling point of the VDI-in-a-box solution is cost. All too often you hear that switching to VDI does not save on CapEx, and that it’s only through OpEx savings that you can realise the ROI of virtualising client desktops. If you are looking at a desktop refresh then you can get that ROI, but that isn’t the case for every client. Kaviza aims to provide a complete VDI solution for under £350 ($500 US) per desktop. That cost includes all hardware & software at both the client and server end. The low cost of the software, and the fact that it’s designed to sit on standalone, low-cost hypervisors using local storage, mean that, particularly for smaller-scale or SMB deployments, you are not hit by the cost of additional brokers or management servers. It’s also claimed to scale without a cluster of hypervisors, thanks to the grid architecture used by the Kaviza appliance itself.

The v3.0 release of the product adds some extra functionality to improve the end-user experience. Part of the investment from Citrix has allowed Kaviza to use Citrix HDX technology for connecting to the client desktops. This allows what Citrix defines as a “high definition” end-user experience, including improved audio-visual capabilities & WAN optimisation. This is supported in addition to the conventional RDP protocol to the client VMs.

I will freely admit that I’m a bit of a VDI virgin. While I knew a bit about the technology, my current employer hasn’t, until very recently, seen a need for it within our environment, so I’ve tended to wander off for a coffee whenever someone mentioned it. At a recent London VMware User Group meeting, Stuart McHugh presented on his journey into VDI and I was so impressed that I thought I’d take a closer look. I’ve not had a chance to play around with View much, so I can’t comment on how HDX compares to PCoIP; however, from reading other people’s opinions of it, it seems that HDX is as good. (source: http://searchvirtualdesktop.techtarget.com/news/article/0,289142,sid194_gci1374225,00.html)

The kMGR appliance central to VDI-in-a-box will install on either ESX or Xen on 32- or 64-bit hardware. I’m told that Hyper-V support is due pretty soon – having the appliance sit on the free Hyper-V Server would definitely be good. It’ll also use the free version of XenServer, but sadly for VMware fans such as myself it will not currently run on the free version of ESXi. According to Kaviza, needing a paid ESXi licence will only bump up the projected costs by around £30 per concurrent desktop.
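
To put those figures side by side, here is a rough back-of-envelope sketch in Python. It simply multiplies the quoted ~£350 per-desktop target, plus the ~£30 per-concurrent-desktop uplift for paid ESXi, by a desktop count. The constants and the `projected_cost` function are my own illustrative assumptions rather than a Kaviza pricing tool, and the £350 is really an upper-bound target rather than a fixed price.

```python
# Back-of-envelope cost sketch using the figures quoted in this post.
# Assumptions (mine, not Kaviza's): the "under £350" target is treated as a
# flat per-desktop figure covering all hardware and software, and the ~£30
# uplift applies per concurrent desktop when paid ESXi replaces a free host.

BASE_PER_DESKTOP_GBP = 350  # Kaviza's per-desktop target (an upper bound)
ESXI_UPLIFT_GBP = 30        # approx. extra per concurrent desktop on paid ESXi


def projected_cost(concurrent_desktops: int, paid_esxi: bool = False) -> int:
    """Return an approximate total deployment cost in GBP."""
    if concurrent_desktops < 0:
        raise ValueError("concurrent_desktops must be non-negative")
    per_desktop = BASE_PER_DESKTOP_GBP + (ESXI_UPLIFT_GBP if paid_esxi else 0)
    return concurrent_desktops * per_desktop


if __name__ == "__main__":
    for n in (10, 25, 50):
        free_host = projected_cost(n)
        esxi_host = projected_cost(n, paid_esxi=True)
        print(f"{n} desktops: ~£{free_host} on a free hypervisor, "
              f"~£{esxi_host} on paid ESXi")
```

Real quotes will of course vary with hardware choices and volume, so treat the output as a sanity check rather than a budget.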

The proof of the pudding will always be in the eating, so rather than talk about the live demo I got from Nigel, I’ll dive right into my own evaluation of the product. Kaviza claim that the product is so easy to use you can deploy an entire environment in a couple of hours. I would agree with this: even with the little snags I introduced through a minimal reading of the documentation, and a quick trip to the shops, I managed to get my first VM deploying surprisingly quickly.

Quick background on my test lab: I don’t have the space, cash or a forgiving enough partner to run much in the way of a full-scale setup from home, so my lab is anything I can run under VMware Workstation. Thankfully I have a pretty quick PC with an i7 quad-core CPU & 8 GB of memory, so enough for a couple of ESXi hosts.

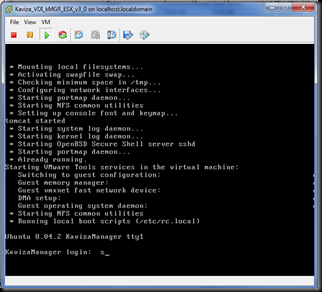

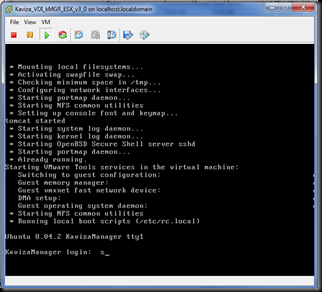

I downloaded a shiny new ESXi 4.1 ISO from VMware after a quick update to Workstation, and as ever within a few minutes I had a black box to deploy the Kaviza appliance to. After a pretty hefty download and unpack (to just over 1 GB), the product deployed via an included OVF file. While I was waiting for the appliance to import, I started the build of what was to be my golden VM from a fresh Windows XP ISO. The kMGR appliance booted up to a pretty blank-looking Linux prompt.

As the next step in configuration involves hitting a web management interface, I think a quick reminder such as “to manage this appliance, browse to https://xxx.xxx.xxx.xxx” wouldn’t have gone amiss.

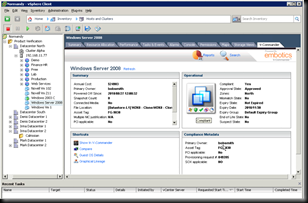

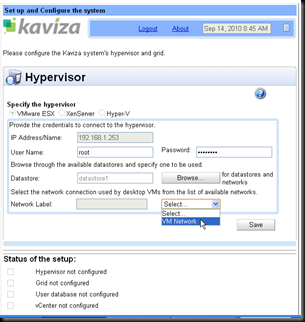

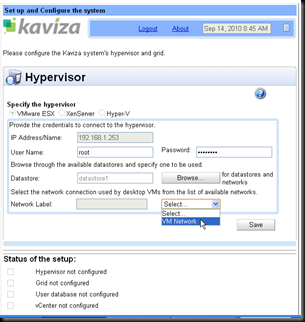

I was able to grab the IP of the appliance from the VI Client, so I hit the web management page to start building the Kaviza grid.

At this stage I hit the first gotcha: a wonderful little popup that very politely explained that ESXi 4.1 was not supported and asked whether I would like to redeploy the appliance. After the aforementioned trip to the shops to calm down, I trashed the ESXi 4.1 VM and started again with an older 4.0 ISO I had handy.

This time I was able to build the grid, providing details of the server, whether I was going to use an external database and whether I was using vCenter. (In a production deployment, even though you would not require the advanced functionality of vCenter, I think there is a chance it would be used if you had an existing one, so that you could monitor hardware alerts etc.) Kaviza best practice states that you should put your VDI hosts into a separate datacenter to avoid any naming conflicts.

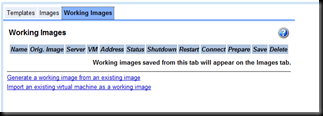

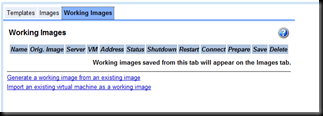

With a working server, I needed to define some desktop images, so I took the little XP desktop VM I’d built in the background (please note I did pretty much nothing to this VM other than install Windows from an ISO that had been slipstreamed with SP3) and started the process of turning it into a prepared image for desktop deployment.

The first image is built from a running VM that you could have deployed, or recently P2V’d, to the host server. I was hoping the process would be a little more automated than it was, and as someone who doesn’t read manuals it was not immediately obvious. I can confirm that creating subsequent images is a much more straightforward process. At the image creation stage I became aware of the second little feature that caused a delay. The golden VM requires the installation of the Kaviza Agent (this isn’t automated, but it is pretty straightforward), and this agent requires version 3.5 of the .NET Framework, which took a little time to download and deploy. I’m sure those of you with a more mature desktop image will most likely not hit this snag. After testing a sysprep of the image, I was finally able to save it so that it would become an official image.

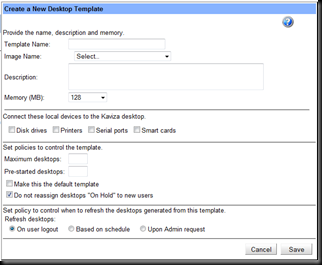

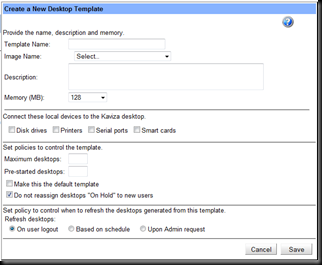

From the image, you can create templates. Templates represent a set of policies wrapped around a given machine, and so enable a lot of the customisation (for instance, the OU that the machine will be joined to, the amount of memory it has, and which devices can map back to the end user).

This is also where you specify the size of the pool for this particular desktop: the total number of machines in the pool and the number to keep pre-deployed and ready. The refresh cycle of the desktops can also be set up; if you have a good level of user and application abstraction, you can have a desktop refresh as soon as a user logs out. I gave this a test, and even with my very small-scale setup and tiny XP VMs I was able to keep the system pretty busy with a few test users logging in, to see how quickly desktops were spawned and reclaimed. With large-scale deployments I can see that possibly causing some issues with Active Directory if you had a particularly high turnover of machines and a long TTL on AD records.
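
To make those pool settings a bit more concrete, here is a toy Python model of a pool with a maximum size, a pre-deployed “ready” buffer and refresh on logout. `DesktopPool`, its methods and the `vdi-NNN` naming are hypothetical and purely illustrative, not Kaviza’s implementation or API; the `created` counter stands in for the number of fresh machines (and so fresh AD computer joins) the pool has had to spin up.

```python
from collections import deque
from itertools import count


class DesktopPool:
    """Toy model of a VDI desktop pool (hypothetical, not Kaviza's API).

    max_size     -- total desktops the pool may hold (ready + in use)
    ready_target -- desktops to keep pre-deployed, waiting for a login
    """

    def __init__(self, max_size: int, ready_target: int) -> None:
        if not 0 <= ready_target <= max_size:
            raise ValueError("need 0 <= ready_target <= max_size")
        self.max_size = max_size
        self.ready_target = ready_target
        self._ids = count(1)
        self.ready = deque()  # booted desktops nobody is using yet
        self.in_use = {}      # user name -> desktop name
        self.created = 0      # clones made so far (each is a fresh AD join)
        self._replenish()

    def _clone(self) -> str:
        # Stand-in for cloning a new desktop from the template's image.
        self.created += 1
        return f"vdi-{next(self._ids):03d}"

    def _replenish(self) -> None:
        # Top up the ready buffer without exceeding the pool's total size.
        while (len(self.ready) < self.ready_target
               and len(self.ready) + len(self.in_use) < self.max_size):
            self.ready.append(self._clone())

    def login(self, user: str) -> str:
        if user in self.in_use:
            return self.in_use[user]
        # No spare desktop but spare capacity: clone one on demand.
        if not self.ready and len(self.in_use) < self.max_size:
            self.ready.append(self._clone())
        if not self.ready:
            raise RuntimeError("pool exhausted")
        desktop = self.ready.popleft()
        self.in_use[user] = desktop
        self._replenish()
        return desktop

    def logout(self, user: str) -> None:
        # Refresh on logout: the used desktop is thrown away, never reused.
        self.in_use.pop(user)
        self._replenish()


if __name__ == "__main__":
    pool = DesktopPool(max_size=4, ready_target=2)
    for name in ("alice", "bob"):
        print(name, "->", pool.login(name))
    pool.logout("alice")
    print("carol ->", pool.login("carol"))
    print("desktops created so far:", pool.created)
```

Because each logout discards a desktop and each login triggers a top-up clone, `created` climbs with user turnover rather than with pool size, which is the churn behind the Active Directory concern above.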

To test the user experience, I deployed a smaller number of slightly larger XP machines and installed the optional Citrix client to see what HDX was all about. I have to admit to being pretty surprised that a remote connection to an XP session inside a nested ESX host under Workstation was able to play a TV show recorded on my Windows Home Server at full screen with the audio completely in sync. I would seriously consider it for the extra $30 per concurrent user licence. I understand that the HDX protocol does need a proper VPN or Citrix Access Gateway to be fully available over the internet, and that the supplied Kaviza Gateway software, which publishes the Kaviza desktop over an SSL-encrypted link without the use of a VPN, is for RDP only. It’s not the end of the world, but it’s something to think about.

I was very impressed with the ease with which I was able to start deploying desktops, and with the simplicity of the environment needed to do so. Although the product would scale up on its own, I believe there is likely to be a sweet spot beyond which a traditional VDI solution would work out cheaper. For SMB/SME, branch office and small-scale deployments, this really is an ideal solution from a cost point of view. This was of course only at the pre-proof-of-concept stage, but going to a production solution wouldn’t necessarily be much harder at the infrastructure level. The same level of work would need to be done to produce the golden desktop image regardless of the choice of VDI technology. If you’d like to try the product yourself, head over to http://www.kaviza.com and grab a trial.

DISCLOSURE: I have received no compensation and used trial software freely available on the Kaviza website to conduct the testing in this blog post.

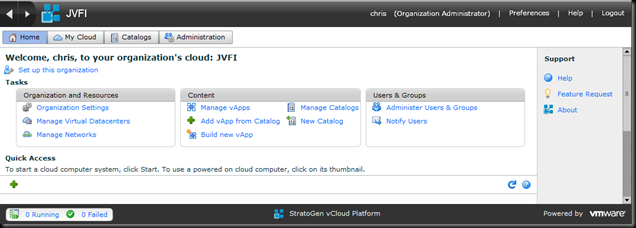

Note that the Management network (as I’ve called it) is a vApp-specific network rather than an organisation-wide one, which is why I still have an internal network connection to the VM so that it can talk to other VMs within the VDC. The firewall VM I configured earlier is organisation-wide, so any machine in the VDC could be published via it. For larger deployments I wonder if it would make sense (although it’s not really within the spirit of “the cloud”) to use hardware devices for edge networking, for example an F5 load balancer. While F5 do have a VM available which would offer a per-vApp LTM instance, some shops may want the functionality of the physical hardware (for example, SSL offload). There may also be licence considerations when it comes to deploying the edge layer as multiple virtual instances.

![f09cb019-7f5b-4171-ae39-588eaadc1429[6] f09cb019-7f5b-4171-ae39-588eaadc1429[6]](http://jfvi.co.uk/wp-content/uploads/2010/11/f09cb0197f5b4171ae39588eaadc14296_thumb.png)